The Phenomenon of Mass Distraction

The Flux Phenomenon and Generative AI

In recent months, the world of artificial intelligence has witnessed an explosion in the popularity of generative AI tools, with LoRA and Flux among the most prominent. I will focus on Flux because of the impact it has had. Flux is an advanced image-generation tool that has captured the attention of a broad spectrum of users, from tech experts to enthusiasts and creatives. It uses advanced AI techniques to generate images from textual descriptions (Text2Img), allowing users to create stunning visuals without traditional artistic or technical skills. This phenomenon has sparked a genuine internet frenzy, with a constant flow of newly generated images flooding social media, forums, and specialized websites. The images span every style, and their quality is such that even I, an AI expert, photography enthusiast, and graphic designer for about 22 years, could not tell at first glance whether some of them were AI-generated or taken with a Polaroid or a disposable Kodak camera from the '90s.

The appeal of Flux lies in its ability to transform simple textual descriptions into visually striking images, including images that contain text, which has democratized access to digital artistic creation free of charge. Generative AI in general has not only captured the attention of those interested in digital art but has also opened the door to new forms of creative expression for a much broader audience. The ease of use and the surprising results that can be obtained with Flux have created a viral effect, where more and more people feel drawn to try the tool and share their creations online. This increase in the popularity of Flux has been largely driven by the tool's ability to generate content that is not only visually appealing but also innovative and often unexpected, capturing the imagination of millions of users worldwide.

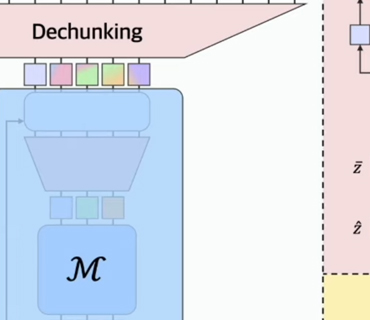

It is important to note that Flux is not an isolated phenomenon but part of a broader trend in generative AI, which has seen rapid development and adoption in recent years. Models like DALL-E, Stable Diffusion, and Midjourney have paved the way for this new wave of generative tools, and Flux has managed to stand out in this landscape for its ability to generate images with a level of detail and quality that exceeds expectations. The use of advanced deep learning techniques and models trained with large volumes of data has allowed Flux and other similar tools to evolve rapidly, improving both in terms of the accuracy of the generated images and the ability to interpret complex and abstract descriptions.

The arrival of Flux and other generative AI tools has led to a significant shift in how we interact with technology and content creation; producing marketing visuals, for example, is now within everyone's reach. Whereas in the past creating digital images required technical and artistic skills, now anyone with access to these tools can create visuals that previously only professionals could produce. This has led to a proliferation of content generated by ordinary users, which has democratized the creative process but has also raised questions about the originality and value of AI-generated art and about the future of human creativity in a world increasingly dominated by artificial intelligence.

The virality of Flux has also been facilitated by the intrinsic nature of social platforms, where visual content tends to capture attention more quickly than other types of content. The images generated by Flux are often shared and spread at high speed, fueling a positive feedback loop where more people are motivated to experiment with the tool and share their results. This cycle has contributed to the rapid expansion of Flux and has set a new standard in generative image creation, highlighting AI's ability to transform not only technology but also culture and forms of expression in the digital age.

However, this trend has sparked a debate within me, as an AI expert and technology critic. I want to argue in this article that the emphasis on generative AI, particularly on tools like Flux, has diverted attention from more substantial and potentially transformative applications of AI in fields such as healthcare, education, the environment, and governance. While Flux's ability to generate images is impressive, it also highlights the human tendency to focus on the novel and spectacular, often at the expense of a deeper analysis of the implications and potential of artificial intelligence in areas that could have a much more significant impact on society.

This phenomenon reflects a broader trend in contemporary technology and culture, where innovations that capture public attention are often those that are more accessible and visually impactful, regardless of their true value or long-term impact. As Flux and other generative AI tools continue to evolve and gain popularity, it is crucial to consider not only what these technologies can do but also how they fit into the broader landscape of artificial intelligence and the impact they have on our perception of what AI can and should be in the future.

The Power of Trends in the Digital Age

In the digital age, trends play a fundamental role in how people consume information, interact with technology, and participate in global culture. Trends emerge almost spontaneously, often from small groups of users who find something novel or attractive and, through amplification on social media and other digital platforms, become mass phenomena. This process is driven by a combination of psychological, social, and technological factors that intertwine to create a feedback loop that boosts the popularity of certain content, tools, or behaviors, often beyond their intrinsic value or practical utility.

One of the main factors fueling the power of trends in the digital age is the human need for belonging and social validation. Digital platforms like Twitter, Instagram, Facebook, and TikTok have created an environment where visibility and recognition are the currency. When an individual or group begins to adopt a new trend, this action is quickly replicated by other users seeking acceptance and recognition within their digital community. This behavior is largely driven by the design of social networks, which reward content that receives more interactions with greater visibility. Thus, trends become self-perpetuating: the initial success of a piece of content makes it more visible, which in turn increases its adoption and sustains its popularity.

The technological factor is also crucial in the spread of trends in the digital age. The algorithms that control content visibility on social platforms are designed to prioritize what generates more interactions, such as likes, shares, and comments. These algorithms not only amplify trends but also accelerate them, allowing something that could have taken weeks or months to become popular a few years ago to reach trend status in hours or days. This acceleration has transformed the dynamics of how trends are created and spread, making their life cycle shorter but much more intense, with immediate and often global cultural impacts.

In addition, trends in the digital age are amplified by the visual nature of social platforms. The preference for visually attractive and easily consumable content, such as images and videos, has led to trends being built around elements that can be quickly identified, replicated, and shared. This phenomenon is evident in the proliferation of memes, viral challenges, and, as discussed earlier, tools like Flux that allow for creating visual content without needing specialized skills. This type of content is not only easy to consume but also encourages active participation: users can contribute their own creations, reinforcing its virality and turning it into a full-fledged trend.

Another important aspect of the power of trends in the digital age is their ability to cross cultural and geographical boundaries instantly. Unlike previous eras, where cultural trends might have been limited to specific regions or taken years to propagate globally, today, a trend can emerge in one corner of the world and, thanks to global connectivity, be adopted by millions of people in a matter of days. This has created a shared global culture where trends are not only quickly adopted but also reinterpreted and adapted by different cultures, which in turn gives them new life and meaning. This phenomenon of trend globalization has been enhanced by the ubiquity of the internet and the accessibility of digital tools, allowing anyone with a connected device to participate in creating and spreading trends.

However, the power of trends in the digital age also has a dark side. The speed with which trends spread and their ability to capture mass attention can lead to superficiality in information consumption and a disproportionate focus on the ephemeral and spectacular. In this context, trends can divert attention from more complex or important issues, prioritizing entertainment and novelty over depth and critical analysis. This phenomenon is particularly concerning in areas like technology, where the emphasis on the most eye-catching applications of artificial intelligence, such as generative image creation, can overshadow deeper discussions about AI's ethical, social, and economic impact on society.

Moreover, the fleeting nature of trends in the digital age means that what is popular today may be forgotten tomorrow, creating a fast-consumption culture in which the lasting value or long-term impact of a technology or piece of content is discounted in favor of the immediate and viral. This not only affects how content is consumed but also how technologies are developed and promoted. Companies and developers may be tempted to prioritize products that are more likely to go viral over those with deeper but less immediate potential for impact.

In this sense, the power of trends in the digital age not only reflects user preferences and behaviors but also shapes the decisions of content creators, technology companies, and software developers. The pressure to be at the crest of what is popular can lead to an innovation cycle that focuses more on what is virally attractive than on what is technically advanced or socially beneficial. This phenomenon is particularly visible in the field of artificial intelligence, where tools that capture public attention, like Flux, may receive disproportionate attention and development compared to other AI applications that could have a more significant but less immediate impact.

It is important to recognize that trends in the digital age are a manifestation of how technology and culture interact in a continuous cycle. Digital platforms have transformed not only how trends are created and consumed but also how they are interpreted and valued. In this new environment, the power of trends lies not only in their ability to capture attention but also in their ability to redefine what we consider important, valuable, and worthy of discussion. This power is both an opportunity and a challenge, as it offers new avenues for creativity and innovation but also raises questions about the direction these trends are leading us as a society.

The Danger of Mass Distraction

The phenomenon of mass distraction is an increasingly concerning topic in contemporary society, especially in the digital age, where the proliferation of information and content is just a click away. In an environment saturated with stimuli, it is easy for collective attention to be diverted to what is most striking, novel, or entertaining, leaving aside issues of greater importance and significance. This tendency toward mass distraction is not just a matter of individual preference but a cultural phenomenon that has profound implications for society as a whole, particularly in the context of the development and implementation of advanced technologies such as artificial intelligence.

One of the most obvious dangers of mass distraction is its ability to divert public attention and resources toward topics or products that, while novel or visually appealing, do not necessarily provide significant long-term value. In the field of artificial intelligence, this manifests in the obsession with applications like AI image or video generation, which, although technologically impressive, represent only a small fraction of the total potential of artificial intelligence. While generative image creation may capture the public's imagination and dominate conversation on social media, AI applications in fields such as healthcare, education, security, and sustainability receive much less attention despite their potential to have a much deeper and longer-lasting impact on society.

This distraction not only affects the public perception of what is important in the field of artificial intelligence but can also influence the direction in which research and development resources are channeled. When attention and investment are disproportionately concentrated in areas that generate high levels of media enthusiasm but do not necessarily contribute significantly to the common good, there is a risk of underfunding and underdeveloping AI applications that could address critical problems such as climate change, accessible and quality healthcare, and reducing social inequalities. This creates an imbalance in innovation, where the most eye-catching applications receive disproportionate support while those with the potential for more significant social impact are relegated to the background.

The danger of mass distraction also extends to the realm of politics and regulation. In a context where public attention is constantly diverted by the latest technological or cultural trends, there is a real risk that legislators and policymakers will not act with the urgency and seriousness necessary to address the ethical and social challenges posed by artificial intelligence. While attention is focused on AI applications that are, in many cases, superficial, critical issues such as privacy, equity, accountability, and transparency in the use of artificial intelligence may not receive the consideration they deserve. This neglect could lead to implementing laws and regulations that are not adequately informed by a deep understanding of AI's implications, which in turn could result in ineffective or harmful policies that hinder progress in crucial areas.

Mass distraction also has implications for education and technological literacy. As user attention focuses on flashy but unsubstantial applications of artificial intelligence, there is a risk that the general public will develop a superficial understanding of what AI is and how it can be used to solve complex problems. This focus on the superficial could lead to a culture where technology is seen primarily as a tool for entertainment or content creation rather than a means to address critical global problems. This could limit society's ability to make informed decisions about the use and regulation of artificial intelligence, perpetuating a cycle where decisions about technology development and implementation are made without adequate knowledge of its possibilities and risks.

It is also important to consider the psychological and social impact of mass distraction on individuals and communities. The constant exposure to content that appeals to the senses and emotions, often at the expense of deep reflection and critical thinking, can lead to a state of information overload where people feel overwhelmed and disconnected from the more serious issues facing their communities and the world at large. This overload can generate apathy or, worse, a false sense of understanding or commitment to the problems that truly matter. In the case of artificial intelligence, this could manifest in widespread support for technologies that are more spectacular than useful or in the lack of an informed public debate on the most significant and potentially disruptive AI applications.

The phenomenon of mass distraction, therefore, is not just a matter of personal preferences or passing fads. It is a systemic problem that has the potential to influence the direction of technological development, public policy formulation, education, and social awareness in ways that can be profoundly harmful if not addressed critically. In an increasingly interconnected and technology-dependent world, it is crucial that collective attention is directed toward those aspects of artificial intelligence that have the greatest potential to improve people's lives and solve the most pressing global challenges rather than allowing attention to be diverted to applications that, while interesting, do not represent the best that AI can offer.

Regulation and Innovation Stagnation

Regulation of artificial intelligence is a complex issue that has gained increasing relevance in recent years as AI-based technologies have begun to integrate into a wide range of sectors, from healthcare and education to security and the economy. However, this regulation process, while necessary, has posed significant challenges that could contribute to innovation stagnation. As governments and international organizations attempt to balance protecting citizens' rights with fostering technological innovation, an inevitable tension arises between the push to regulate and the need to allow technology to evolve without excessive restrictions.

One of the main reasons why the regulation of artificial intelligence can lead to innovation stagnation is the very nature of the technology in question. Artificial intelligence, particularly in its most advanced forms, such as deep learning and autonomous systems, is a rapidly evolving technology driven by continuous advances in hardware, software, and algorithms. Regulation, on the other hand, is inherently slower, as it must go through legislative and administrative processes that can take years. This temporal lag creates an environment where laws and regulations can quickly become obsolete as they are based on a state of technology that has already been surpassed by new innovations.

Moreover, the lack of clarity and consistency in regulations globally exacerbates this problem. Given that artificial intelligence is a global technology, with developers and users spread worldwide, the existence of disparate regulatory frameworks across different countries and regions can create significant barriers to innovation. Companies and developers operating internationally face a mosaic of regulations that are often contradictory or unclear, complicating legal compliance and potentially discouraging investment in research and development. This is particularly problematic in areas of rapid technological advancement, where regulatory uncertainty can lead to the postponement or cancellation of innovative projects for fear of future penalties or restrictions.

Regulation can also have a deterrent effect on experimentation and entrepreneurship. Startups and small innovators, who are often the engines of technological advancement, may be particularly affected by a restrictive regulatory environment. Unlike large corporations, which can afford dedicated legal teams and resources to navigate complex regulatory frameworks, startups may find themselves in a position where they simply cannot take on the risks associated with compliance with costly or confusing regulations. This can lead to a concentration of power in the hands of a few large tech companies that can influence regulations in their favor, which could, in turn, stifle competition and diversity of ideas in the field of artificial intelligence.

Another important dimension of this issue is preventive regulation, i.e., the tendency of some governments and international organizations to impose preventive restrictions on the development and use of artificial intelligence, based on concerns about possible future risks. While these concerns are not unfounded, and it is crucial to consider AI's ethical and social implications, preventive regulation can have the unintended effect of stifling innovation before emerging technologies have had the opportunity to demonstrate their value and address those risks through innovation. In other words, imposing excessive restrictions too soon may prevent the most promising technological solutions from being fully developed, depriving society of the potential benefits these technologies could offer.

It is also important to consider the role of regulation in the public perception of artificial intelligence. Laws and regulations not only dictate what is legal or illegal but also send signals to society about what is safe, reliable, and acceptable. In an environment where regulation is overly restrictive or poorly designed, a climate of distrust or fear towards artificial intelligence may develop, which in turn could inhibit the adoption of innovative technologies by businesses and consumers. This phenomenon can create a vicious cycle in which the lack of widespread adoption of AI reduces incentives for innovation, which in turn hinders the development of new applications and solutions.

Finally, innovation stagnation can also be a consequence of the lack of adaptive regulatory frameworks that can evolve along with technology. Artificial intelligence is a constantly changing field, with new advances regularly emerging that challenge existing norms. However, many regulatory systems are rigid and are not designed to quickly adapt to these changes. This can lead to a situation where the latest innovations cannot be implemented or commercialized because regulations have not been updated to reflect new technological realities. In this context, it is essential that regulators adopt more flexible and principle-based approaches that can adapt as technology evolves rather than trying to impose static rules that could quickly become obsolete.

Regulation of artificial intelligence is a crucial aspect to ensure that the development of this technology is carried out ethically and safely, but it is also a process that must be handled carefully to avoid stifling innovation. The challenge lies in finding a balance that allows experimentation and progress while protecting society from the potential risks associated with artificial intelligence. To achieve this, a regulatory approach that is both proactive and adaptive is needed, fostering innovation rather than restricting it and based on a deep and up-to-date understanding of AI's capabilities and limitations.

Critical Areas Where AI Can Transform the Future

Artificial intelligence has the potential to profoundly transform various critical sectors, addressing global challenges and offering solutions that could significantly improve the quality of life worldwide. Below, some of the key areas where AI can have a transformative impact in the future are explored, analyzing the opportunities and specific challenges of each field.

One of the most promising sectors for applying artificial intelligence is healthcare. AI has the potential to revolutionize healthcare by improving disease diagnosis, treatment, and management. AI algorithms can analyze large volumes of medical data, identifying patterns that human professionals might overlook. This is especially useful in the early detection of diseases like cancer, where early diagnosis can make the difference between life and death. Additionally, AI can personalize treatments by analyzing individual genetic and medical data, allowing doctors to design specific treatment plans for each patient, improving the effectiveness of therapies and reducing side effects. AI is also being explored for managing hospital resources, optimizing bed allocation, surgery scheduling, and staff distribution, which could improve efficiency and reduce operational costs. However, implementing AI in healthcare poses ethical and privacy challenges, especially concerning handling sensitive data and making automated decisions in patient care.

Another field where AI can have a significant impact is chemistry, particularly in discovering new materials and drugs. Traditional research and development methods in chemistry are often costly and prolonged, with many failures along the way to discovering effective compounds. AI can accelerate this process by predicting the properties of new chemical compounds before they are synthesized in the laboratory, using models that analyze data from previous molecular structures and chemical behaviors. This not only reduces the time and costs associated with developing new drugs but also increases the likelihood of success, allowing researchers to focus on the most promising compounds from the start. Additionally, AI can help design materials with specific properties for industrial applications, from manufacturing more efficient batteries to creating more resistant and sustainable materials. Despite these advantages, the widespread adoption of AI in chemistry also faces challenges, such as the need for large volumes of high-quality data and integrating these tools into existing research processes.

The environment is another area where artificial intelligence can offer crucial solutions to some of the most urgent problems facing the planet. AI can significantly contribute to the fight against climate change by optimizing the use of natural resources, predicting extreme weather events, and improving energy efficiency. For example, AI algorithms can analyze large-scale climate data to predict climate change patterns and provide vital information for decision-making in environmental policies. Additionally, AI can be used to optimize power grids, integrating renewable energy sources like solar and wind, and improving energy use efficiency in cities and communities. In agriculture, AI can help improve productivity and sustainability by predicting crop growth patterns, optimizing water and fertilizer use, and monitoring soil and plant health. However, applying AI in the environment must also be managed carefully to avoid negative impacts, such as excessive dependence on automation in natural resource management or creating inequalities in access to these technologies.

In the realm of governance, artificial intelligence has the potential to transform decision-making and public management, improving government efficiency and transparency. AI can be used to analyze large amounts of government and social data, helping policymakers identify trends, evaluate policies, and make more informed decisions. For example, AI can help predict the effects of different economic policies, allowing governments to anticipate the consequences of their decisions and adjust their strategies accordingly. Additionally, AI can improve public service delivery by automating bureaucratic processes, reducing costs, and improving accessibility and efficiency. However, using AI in governance also raises important ethical issues, such as transparency in automated decision-making, accountability for AI errors, and the risk of biases in algorithms that could perpetuate existing inequalities.

Education is another field where AI could have a transformative impact, personalizing learning and making it more accessible to a global audience. AI-based tutoring systems can adapt to individual students' needs, providing educational materials and exercises that match their knowledge level and learning style. This could be particularly beneficial in contexts where educational resources are limited, allowing students to learn at their own pace and on their terms. Additionally, AI can help educators identify areas where students are struggling, allowing for early and targeted intervention that improves educational outcomes. However, implementing AI in education also faces significant challenges, such as ensuring student data privacy, avoiding dehumanizing the educational process, and ensuring that AI does not perpetuate biases or inequalities in access to quality education.

Finally, in security, artificial intelligence can significantly improve threat prevention and response, from local crime to global security challenges. AI-based surveillance systems can analyze large volumes of data in real-time, identifying suspicious patterns and alerting authorities to potential threats before they occur. Additionally, AI can be used in cybersecurity to detect and respond to cyberattacks more quickly and efficiently than traditional methods. However, using AI in security also raises concerns about privacy, mass surveillance, and the potential for abuse by governments or private entities. It is crucial that AI implementation in security is done with a strong emphasis on ethics and protecting human rights.

These are only a few of many possible examples. In each of these areas, artificial intelligence offers opportunities to advance toward a more efficient, equitable, and sustainable future.

Contrast: The Superficiality of Generative AI in Comparison

Generative artificial intelligence, particularly in its most popular applications such as creating images, music, and text, has captured the public's attention for its ability to produce impressive results with a high degree of apparent creativity. These tools, which use advanced deep learning models to generate new content from existing data, have proven fascinating to both experts and the general public due to their ability to imitate or even surpass human work in certain creative areas. However, set against AI applications that address critical problems in fields such as healthcare, security, education, and the environment, generative AI, as impressive as it may be, turns out to be a superficial use of the technology, far from the transformative potential AI has in those other domains.

The superficiality of generative AI can be observed in several key aspects. First, while AI-generated content is visually or audibly impressive, the utility of these products is often limited to entertainment, marketing, or digital content creation. These applications, while attractive, do not significantly address the fundamental problems facing society. For example, while a generative model can create a digital artwork that amazes with its detail or style, this creation does not tangibly contribute to improving quality of life, solving medical problems, reducing poverty, or mitigating climate change. In this sense, generative AI focuses more on content production than on creating solutions.

Moreover, generative AI tends to work on pre-existing data, and while it can combine and recontextualize information in innovative ways, it lacks the ability to generate true scientific or technological innovations. This contrasts sharply with AI applications in fields such as chemistry or biomedicine, where algorithms not only analyze data but can also discover new chemical compounds, identify potential medical therapies, or design molecules with specific properties. In these fields, AI actively expands the boundaries of human knowledge and contributes directly to advances that have a profound impact on global health and well-being.

The superficiality of generative AI also manifests in its dependence on training data that, although extensive, largely reflects the world as it is, rather than how it could be improved. For example, an image generator can create endless variations of landscapes or portraits based on existing artistic styles but cannot offer solutions to the climate crisis or design sustainable energy technologies. On the other hand, AI models applied to engineering and sustainability are designed to optimize complex systems, improve energy efficiency, and reduce environmental impact, contributing to building a more sustainable future. This contrast highlights the fundamentally different goals that each type of AI is designed to achieve.

Another dimension of this contrast is the social and ethical impact of generative AI compared to other AI applications. Generative AI has raised important questions about copyright, intellectual property, and the value of human creative work, but these issues, though significant, are less pressing than the ethical dilemmas associated with AI in healthcare, security, or governance. For example, automated decisions in healthcare can have life-or-death consequences, and AI applications in public security can directly affect individual rights and freedoms. The superficiality of generative AI lies in the fact that, although it generates debates about creativity and originality, it rarely confronts the deeply complex ethical issues that emerge when AI is used to make critical decisions in real life.

Finally, generative AI, despite its popularity, risks diverting resources and attention from more significant developments in artificial intelligence. The fascination with AI's creative capabilities has led to an explosion of interest and resources devoted to improving generative models like DALL-E, Midjourney, and others, while applications that could have a direct impact on society may not receive the same level of support or investment. This imbalance may perpetuate the superficiality of generative AI, relegating deeper and more transformative developments to the background in public awareness and technological investment.

The true transformations driven by artificial intelligence are occurring in fields where the technology is applied to solve complex and critical problems that directly and tangibly affect society. While the popularity of generative AI is understandable, it should be seen in context, recognizing that AI's true potential lies in its ability to contribute to meaningful progress in the world's most urgent challenges.

The Role of Experts and the Scientific Community

The role of experts and the scientific community in the development, implementation, and regulation of artificial intelligence is crucial to ensuring that this technology is used responsibly, ethically, and effectively. Given the rapid pace of advances in AI and its growing influence on multiple aspects of daily life, experts have the responsibility not only to lead innovation but also to guide public debate, educate society, and collaborate with policymakers to establish appropriate regulatory frameworks. This multifaceted role is essential to maximizing the benefits of artificial intelligence while minimizing the associated risks.

One of the most important aspects of the role of AI experts is the continuous research and development of the technology. The scientists and engineers working in artificial intelligence are responsible for creating new algorithms, improving the accuracy of existing models, and finding innovative applications that can solve real problems. This research not only drives technological progress but also lays the foundation for applications that can have a transformative impact on society. However, this role goes beyond mere technical development. Experts must also consider the ethical implications of their work, ensuring that the innovations they produce do not perpetuate inequalities, biases, or injustices.

Alongside research and development, AI experts have a crucial role in education and outreach. As artificial intelligence integrates into more aspects of daily life, it is essential that the general public understands how this technology works, its capabilities and limitations, and how it can affect their lives. This is where experts have a special responsibility: they must communicate AI's complexities clearly and accessibly, dispelling misconceptions and providing accurate information that enables people to make informed decisions about its use and adoption. This outreach task also includes educating future generations of scientists, engineers, and professionals in AI, ensuring they are equipped not only with the necessary technical skills but also with a solid understanding of the ethical and social aspects related to their work.

In addition, AI experts play a crucial role in advising policymakers and creating regulatory frameworks that guide the development and implementation of AI. Given the complex and rapidly evolving nature of the technology, lawmakers often lack the technical expertise needed to fully understand the implications of their decisions. Experts must provide informed guidance that helps policymakers create regulations that effectively protect society without stifling innovation. This role is especially important in areas such as data privacy, accountability in automated decision-making, and preventing algorithmic discrimination. Experts can help identify emerging risks and propose proactive solutions before those risks materialize into more serious problems.

The scientific community also plays an important role in creating standards and best practices for AI development and use. As AI expands into new sectors, establishing norms that ensure safety, transparency, and fairness is crucial. Experts can lead the creation of these standards by collaborating with industry, government, and other stakeholders to develop guidelines that are adopted globally. This work is vital to ensuring that artificial intelligence is used consistently and fairly in different contexts and to prevent the technology from being implemented inconsistently or harmfully.

Another critical aspect of the role of experts and the scientific community is their participation in the public debate on artificial intelligence. As AI raises fundamental questions about the future of work, privacy, security, and equity, it is essential that these conversations are informed by the most up-to-date technical and scientific knowledge. Experts must actively participate in the public dialogue, contributing their expertise to help shape public opinion and policies regarding artificial intelligence. This participation also includes the responsibility to challenge erroneous ideas, correct misunderstandings, and ensure that decisions about AI use are based on solid evidence rather than unfounded assumptions or irrational fears.

AI experts must lead by example regarding ethics and social responsibility. As they develop new technologies and applications, they must do so with a deep sense of responsibility towards society. This implies not only considering the potential benefits of their work but also the risks and possible unintended consequences. Experts have a duty to act with integrity, ensuring their research and developments are conducted transparently and with a commitment to public welfare. This ethical approach is essential to maintaining public trust in artificial intelligence and ensuring that technology is used to improve people's lives rather than exacerbate inequalities or cause harm.

This role is crucial to ensuring that artificial intelligence is developed and used in a way that benefits all of humanity and contributes to a fairer and more equitable future, unlike what is happening now because of the new "trend."

The Future of AI and the Need to Focus Attention

The future of artificial intelligence is one of the most crucial and complex topics of our time, with the potential to transform virtually every aspect of society. However, for AI to fully realize its promise, it is vital that the attention of researchers, developers, lawmakers, and the general public be focused on the areas that truly matter. This focus requires a clear understanding of the capabilities and limitations of artificial intelligence, as well as a firm commitment to the ethical and social principles that should guide its development.

First, it is essential to recognize that artificial intelligence is not a monolithic technology but a diverse set of tools and techniques that can be applied in various contexts. From generative content creation to optimizing industrial processes, AI has such a broad range of applications that its true impact will largely depend on how and where it is used. However, there is a real danger that enthusiasm for the more eye-catching applications, such as image generation or automating routine tasks, will displace attention from areas where AI could have a much more significant impact. While generative image creation is impressive, it does not address the fundamental problems facing humanity, such as climate change, global inequality, or chronic diseases. Therefore, it is crucial that both resources and public attention be directed toward AI applications that can change the world for the better.

The responsibility for directing the development of artificial intelligence toward areas of greatest impact lies largely with the scientific community and AI experts. As AI development advances, it is essential that experts work closely with policymakers, international organizations, and other stakeholders to ensure that resources are directed efficiently and ethically toward areas of greatest need. This includes not only the research and development of new technologies but also public education and the creation of a regulatory framework that promotes the responsible and equitable use of artificial intelligence.

The need to focus attention also extends to regulating artificial intelligence. The laws and policies that guide AI's development and use must be designed to foster innovation in critical areas such as healthcare, education, the environment, and security while mitigating the risks associated with its misuse. This requires a proactive approach, where lawmakers stay informed about the latest technological advances and work closely with experts to create a regulatory framework that is flexible, adaptive, and focused on society's well-being. A well-designed regulatory framework can be a catalyst for innovation, encouraging developers to work on applications that have a positive long-term impact.

Another crucial area to focus on is education and training. As artificial intelligence is increasingly integrated into daily life and the work environment, it is essential that current workers and future generations are adequately prepared to interact effectively with this technology. This includes not only technical skills but also a broader understanding of AI's ethical and social implications. Educational institutions and training programs must adapt their curricula to reflect the importance of artificial intelligence, ensuring that students are equipped to contribute positively to a world increasingly influenced by technology. Education should be geared not only toward creating technical skills but also toward developing informed citizens aware of the challenges and opportunities that AI presents.

Society's role is also fundamental. It is vital that the public be well-informed about what artificial intelligence is, how it is used, and its potential benefits and risks. Public understanding of AI can significantly influence the direction of its development, as social pressure and market demand can affect research and development priorities. The media and educational platforms have a great responsibility to provide accurate and accessible information, avoiding sensationalism and promoting an informed dialogue about the future of artificial intelligence.

It is important to remember that the development of artificial intelligence is not an end in itself but a means to achieve broader goals that benefit humanity. As we move towards a future where AI will play an increasingly central role, we must ensure that this technology is used to solve the most pressing problems of our time rather than distracting us with superficial applications that, while impressive, do not significantly contribute to global well-being. The future of artificial intelligence will depend largely on our ability to focus our attention on the areas that truly matter and our commitment to ethical, responsible, and human-centered development. Only through a concerted and directed effort can we ensure that artificial intelligence becomes a positive force for humanity, helping us build a fairer, more equitable, and sustainable future.

I summarize everything in one sentence:

"It is sickening to see how humanity, once again, gets distracted by its new 'toy' instead of focusing on and fostering the true social value that the potential of artificial intelligence has."

The Author

Juan García

Juan García is an Artificial Intelligence Expert, Author, and Educator with over 25 years of professional experience in Industrial Businesses. He advises companies across Europe on AI Strategy and Project Implementation and is the Founder of DEEP ATHENA and SMART &PRO AI. Certified by IBM as an Advanced Machine Learning Specialist, AI Manager and Professional Trainer, Juan has written several acclaimed Books on AI, Machine Learning, Big Data, and Data Strategy. His Work focuses on making complex AI Topics accessible and practical for Professionals, Leaders, and Students alike.
